A recent review of vulnerabilities in the Ray framework uncovered an unpatched flaw dubbed ShadowRay. Hundreds of machine learning clusters appear to have been compromised already, leading to leaks of ML assets. Researchers trace the first attack that used this vulnerability to September 2023, meaning that it has been exploited for over half a year.

ShadowRay Vulnerability Allows for RCE

Ray, one of the most popular open source AI frameworks, contains a severe vulnerability, with hundreds of exploitation cases known at the moment. Oligo Security's research uncovers the peculiar story of CVE-2023-48022: it was originally detected together with four other flaws back in December 2023. While Anyscale, the developer, fixed the rest fairly quickly, this one became a subject of debate. The developers stated that it is intended behavior rather than a bug and refused to fix the issue.

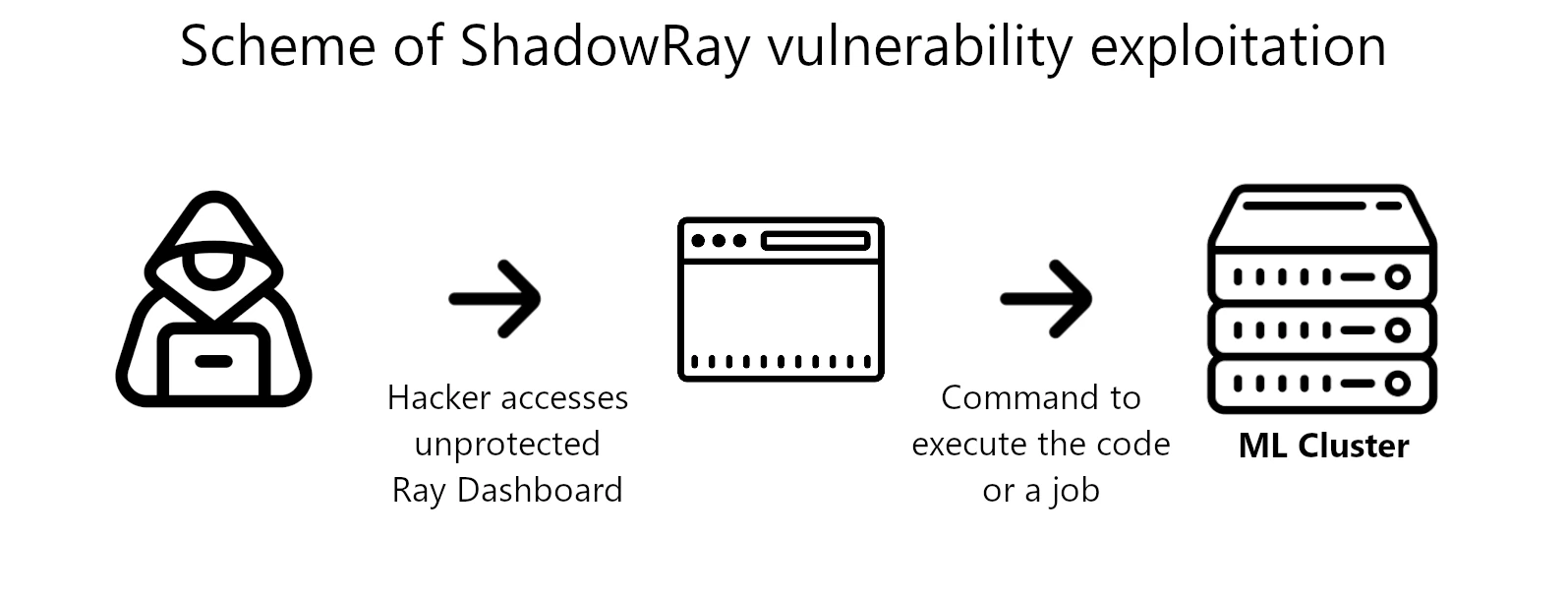

CVE-2023-48022, dubbed ShadowRay, is a remote code execution flaw that stems from the lack of authorization in the Jobs API. The API allows anyone who can reach the dashboard to create jobs on the cluster, and a job can execute arbitrary code, a capability users need quite often in a typical workflow. This was exactly the point of controversy when another research team discovered the flaw in 2023: Anyscale insists that securing the framework and all its assets is the users' responsibility.
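To check whether your own cluster is exposed in this way, you can query the Jobs API without any credentials and see whether it answers. The sketch below assumes the list-jobs endpoint is `GET /api/jobs/` on the dashboard port and returns a JSON list, as described in Ray's Jobs REST API documentation; the helper name `jobs_api_is_open` is hypothetical. Run it only against clusters you operate.

```python
import json
import urllib.request


def jobs_api_is_open(dashboard_url: str, timeout: float = 3.0) -> bool:
    """Return True if the Ray Jobs REST API lists jobs without any credentials.

    Assumption: the list-jobs endpoint is GET <dashboard_url>/api/jobs/ and
    returns a JSON list, as in Ray's Jobs REST API documentation.
    """
    url = dashboard_url.rstrip("/") + "/api/jobs/"
    # Deliberately send a bare request: no Authorization header, no cookies.
    request = urllib.request.Request(url, method="GET")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            body = json.loads(response.read().decode("utf-8"))
    except (OSError, ValueError):
        # OSError covers refused connections, timeouts and HTTP errors such as
        # 401/403 (urllib's HTTPError is an OSError subclass); ValueError covers
        # bodies that are not valid UTF-8 or JSON.
        return False
    # A JSON list of job records means anyone on the network can read the job
    # list, and through the same unauthenticated API most likely submit jobs.
    return isinstance(body, list)
```

For example, `jobs_api_is_open("http://10.0.0.5:8265")` (a hypothetical internal address) returning `True` would suggest the dashboard is reachable and unauthenticated from wherever the check runs. Only reading the job list, rather than submitting a test job, keeps the probe side-effect free.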

Remote code execution vulnerabilities are among the most severe ones out there, as they let an attacker run arbitrary code on the target, and in the case of a cluster, potentially on several machines at once. In this specific case, it is not workstations that are in danger, but ML clusters, with all the computing power and data they hold.

How Critical Is This Flaw?

As I said, the Ray framework is among the most popular ones for handling AI workloads. Its users include big names like Amazon, Netflix, Uber, Spotify, LinkedIn and OpenAI, as well as hundreds, if not thousands, of smaller companies. The project's GitHub repository boasts over 30k stars, which suggests that the total user count is larger still. So yes, the attack surface is significant.

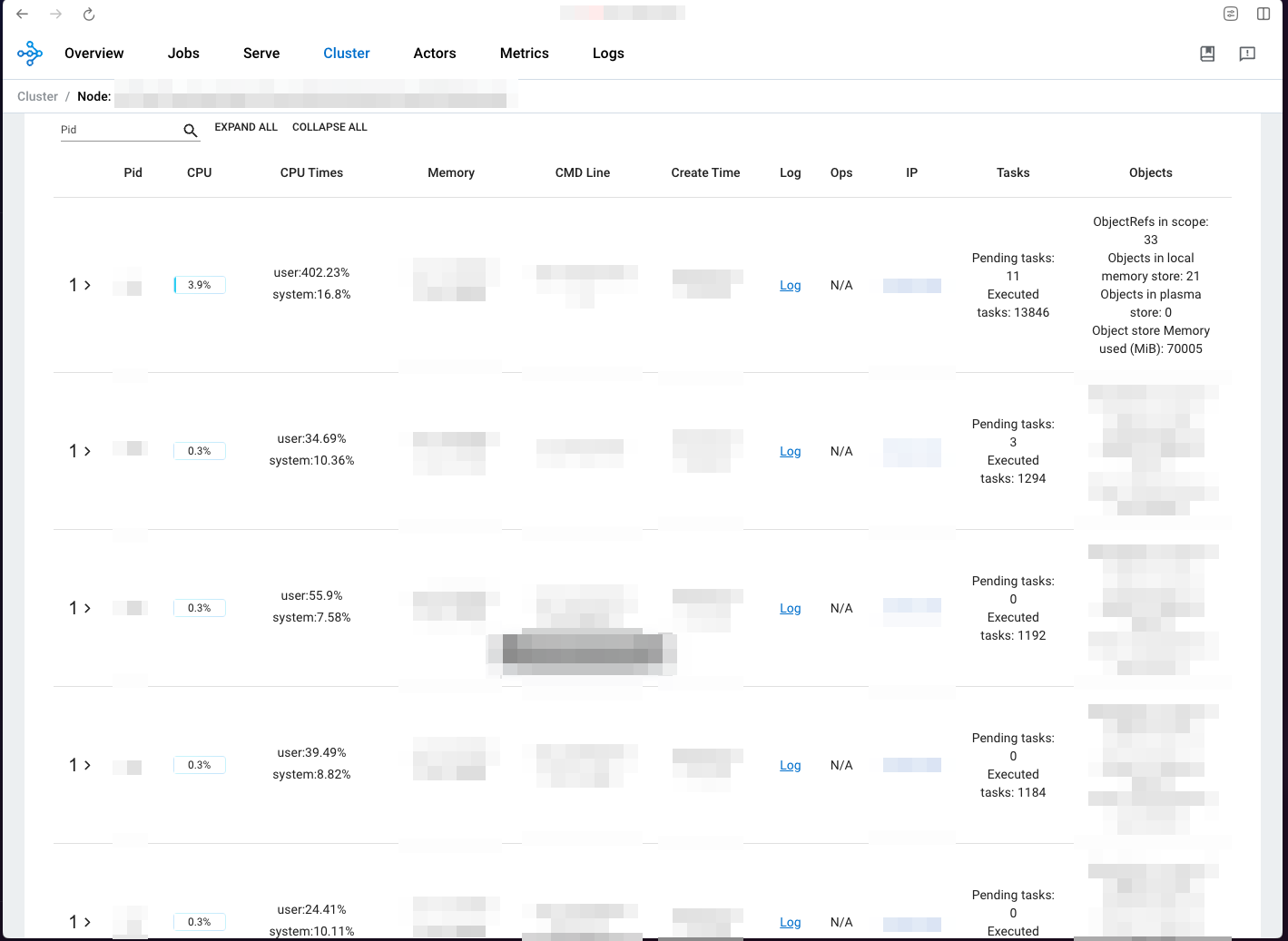

Things look much worse when we consider what exactly gets compromised. Machine learning clusters are quite different from workstations, corporate networks and servers. They are powerhouse systems with hardware tailored to ML workloads, and they hold related data such as access tokens, credentials for connected apps, and so on. Numerous systems that keep such information are interconnected via the Ray framework, so a successful exploitation of ShadowRay effectively grants access to all of it.

Although this hardware, GPUs in particular, is oriented towards AI workloads, it remains usable for other tasks. Upon accessing an ML cluster, fraudsters can deploy coin miners that fill their purses at the victim company's expense. What is more concerning, though, is the possibility of a dataset leak. Quite a few companies train their AI models on their own proprietary developments or on carefully curated data. Leaking such corporate secrets may be critical for large companies and fatal for smaller ones.

ShadowRay Vulnerability Exploited in the Wild

The most unfortunate part about the ShadowRay flaw is that it is already being exploited in real-world attacks. Moreover, hackers most likely exploited it well before its discovery: the original research dates the first exploitation cases back to September 2023. The attacks did not stop either, with some happening less than a month ago, in late February 2024.

Among the visible consequences of the attacks were malicious coin miners that abused the powerful hardware of the hacked clusters. In particular, hackers deployed the XMRig, NBMiner and Zephyr miners. All of them ran in a fileless, living-off-the-land fashion, which made static analysis practically useless against this malware.
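Since file-based scanning did not help here, one cheap behavioral check is to look at the command lines of running processes on each node. The sketch below is a hypothetical heuristic, not an indicator list from the original research: it flags the XMRig and NBMiner names and the `stratum+tcp://`-style mining-pool URLs that miners commonly take as arguments, reading command lines from the Linux `/proc` filesystem.

```python
import os
import re
from typing import Iterable, Iterator, List, Tuple

# Hypothetical indicator list: two miner names mentioned in the report plus the
# stratum mining-pool URL scheme that most miners use to reach their pool.
MINER_PATTERNS = [
    re.compile(r"\bxmrig\b", re.IGNORECASE),
    re.compile(r"\bnbminer\b", re.IGNORECASE),
    re.compile(r"stratum\+(?:tcp|ssl|tls)://", re.IGNORECASE),
]


def flag_miner_processes(processes: Iterable[Tuple[int, str]]) -> List[int]:
    """Return PIDs whose command line matches any miner indicator."""
    return [
        pid
        for pid, cmdline in processes
        if any(pattern.search(cmdline) for pattern in MINER_PATTERNS)
    ]


def read_linux_cmdlines(proc_root: str = "/proc") -> Iterator[Tuple[int, str]]:
    """Yield (pid, command line) pairs from a Linux /proc filesystem."""
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue  # skip non-process entries such as /proc/self
        try:
            with open(os.path.join(proc_root, entry, "cmdline"), "rb") as fh:
                raw = fh.read()
        except OSError:
            continue  # the process exited or we lack permission
        # Arguments are NUL-separated in /proc/<pid>/cmdline.
        yield int(entry), raw.replace(b"\0", b" ").decode(errors="replace").strip()
```

Usage on a node would be `flag_miner_processes(read_linux_cmdlines())`. Keep in mind that renaming the binary defeats the name patterns, and moving the pool URL into a config file defeats the stratum pattern, so treat a clean result as weak evidence at best.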

Less obvious, but potentially more critical, was the leak of data kept on the clusters. I am talking not only about datasets, but also about workflow-related information such as passwords, credentials, access tokens, and even access to cloud environments. From this point of view, the situation is similar to compromising a server that handles the workflow of a software development team.
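Code submitted through an open Jobs API runs with the privileges of the cluster's own processes, so any secret those processes can read should be considered exposed. A quick way to gauge this is to list which environment variables on a node look like credentials. The sketch below is a crude, hypothetical name-based heuristic; `secret_like_env_names` and its pattern are assumptions, and it deliberately returns names only, never values.

```python
import os
import re
from typing import List, Mapping, Optional

# Crude, hypothetical heuristic: variable names that usually carry secrets.
SECRET_NAME = re.compile(r"KEY|TOKEN|SECRET|PASSW(?:OR)?D|CREDENTIAL", re.IGNORECASE)


def secret_like_env_names(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return the sorted names (never the values) of secret-looking variables."""
    if env is None:
        env = os.environ  # default to the current process environment
    return sorted(name for name in env if SECRET_NAME.search(name))
```

Running `secret_like_env_names()` inside a Ray worker gives a rough idea of what an attacker's job could read from the environment alone; files such as cloud CLI credential stores on the node are a separate concern this check does not cover.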

ShadowRay Fixes Are Not Available

As I’ve mentioned above, Anyscale does not agree that the absence of authentication in the Jobs API constitutes a vulnerability. They believe that users should take care of securing the Ray framework themselves. I somewhat agree with this, with one caveat: such a “feature” needs a clearly visible warning during setup. At the scale of OpenAI or Netflix, such shortcomings are unacceptable.

At the moment, the best mitigation is to restrict access to the dashboard, and a properly configured firewall fits this purpose well. Experts also recommend setting up authentication for the Ray Dashboard port (8265), which effectively mitigates the vulnerability.
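Once the firewall rules are in place, it is worth verifying them from a machine outside the trusted network. A minimal sketch, assuming a plain TCP connect test is enough to tell whether the dashboard port answers; `port_is_reachable` is a hypothetical helper name.

```python
import socket

RAY_DASHBOARD_PORT = 8265  # default Ray Dashboard port


def port_is_reachable(host: str, port: int = RAY_DASHBOARD_PORT, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts and timeouts.
        return False
```

Run from outside the trusted network, `port_is_reachable("ray-head.example.internal")` (a hypothetical hostname) should return `False` once filtering works. A successful connection only proves the port is open, not that authentication is missing, so pair it with an unauthenticated request to the API itself.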

Use advanced security solutions that can detect in-memory threats as well as malware on disk. In almost all attack cases, adversaries left no files on disk, carrying out the attack in a living-off-the-land (LOTL) fashion. EDR/XDR solutions may look costly, but recovering from a hack of all of a company's assets costs more, both in money and in reputation.
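To illustrate what "detecting threats that leave no files on disk" can mean at its simplest, here is a hypothetical Linux-only heuristic, far narrower than what an EDR/XDR product does. It inspects the `/proc/<pid>/exe` symlink of each process: payloads launched from anonymous memory via `memfd_create()` show up as `/memfd:<name> (deleted)`, and a binary deleted from disk after launch gets a ` (deleted)` suffix. The function names are assumptions.

```python
import os
from typing import List, Tuple


def is_suspicious_exe_link(target: str) -> bool:
    """Heuristic for the target of a Linux /proc/<pid>/exe symlink."""
    # memfd_create() payloads run straight from anonymous memory and show up
    # as "/memfd:<name> (deleted)"; a binary removed from disk after launch
    # keeps running and gets a " (deleted)" suffix.
    return target.startswith("/memfd:") or target.endswith(" (deleted)")


def find_fileless_candidates(proc_root: str = "/proc") -> List[Tuple[int, str]]:
    """Return sorted (pid, exe target) pairs that look like fileless executions."""
    hits = []
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue  # skip non-process entries such as /proc/self
        try:
            target = os.readlink(os.path.join(proc_root, entry, "exe"))
        except OSError:
            # Kernel threads, exited processes, or insufficient privileges.
            continue
        if is_suspicious_exe_link(target):
            hits.append((int(entry), target))
    return sorted(hits)
```

Expect false positives, for instance long-running services whose binary was replaced by a package upgrade, and run it as root to see other users' processes. It is a triage aid, not a substitute for the memory scanning the paragraph above recommends.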