Slopsquatting is a new type of cyber threat that takes advantage of mistakes made by AI coding tools, particularly LLMs that can “hallucinate”. In this post, we’ll break down this new type of attack, find out why it can occur, dispel some myths, and figure out how to prevent it.

Slopsquatting – New Techniques Against AI-Assisted Devs

Slopsquatting is a supply chain attack that leverages AI-generated “hallucinations” — instances where AI coding tools recommend non-existent software package names. The term draws parallels with typosquatting, where attackers register misspelled domain names to deceive users.

In slopsquatting, however, the deception stems from AI errors rather than human mistakes. The term combines “slop”, referring to low-quality or error-prone AI output, and “squatting”, the act of claiming these hallucinated package names for malicious purposes.

It is a rather unexpected cybersecurity threat that exploits the limitations of AI-assisted coding tools, particularly large language models. As developers increasingly rely on these tools to streamline coding, the risk of inadvertently introducing malicious code into software projects grows: attackers can register the fake package names these tools invent and upload malicious packages under them to public code repositories.

Mechanics of Slopsquatting

The process of slopsquatting unfolds in several stages. First, LLMs, such as ChatGPT, GitHub Copilot, or open-source models like CodeLlama, generate code or suggest dependencies. In some cases, they recommend package names that do not exist in public repositories like PyPI or npm. These hallucinated names often sound plausible, resembling legitimate libraries (e.g., “secure-auth-lib” instead of an existing “authlib”).

In the second stage, developers who fully trust the AI’s recommendations run the code, assuming that every package it references is legitimate. Normally, this ends in build failures or in parts of the resulting program not working. Developers might waste time debugging errors, searching for typos, or trying to figure out why a dependency isn’t resolving, when the real cause is simply that the AI assistant invented a package that does not exist.

In the worst-case scenario, which is expected to become more common, the hallucinated name has already been registered by an attacker and hosts a malicious package. Threat actors specifically target fake names that appear in AI-generated code, or register names they expect an AI to generate, hoping to catch victims in the future. As a result, what looks like a flawless build process in fact installs malware on the developer’s system.

The worst part is that these hallucinations are not random, which makes them predictable. One study analyzed 16 LLMs that generated 576,000 Python and JavaScript code samples. It found that 19.7% of the recommended packages did not exist, amounting to roughly 205,000 unique hallucinated package names. Notably, when the same prompts were re-run ten times, 43% of hallucinated packages reappeared in all ten runs and 58% appeared more than once, a level of predictability that attackers can exploit.
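The repeatability figures above can be made concrete with a small sketch. Assuming one has collected, for each repeated run of the same prompt, the set of suggested package names that turned out not to exist, the two shares the study reports (names seen in every run, names seen more than once) can be computed as follows. The function name and the package names are hypothetical, and the researchers' actual methodology may differ.

```python
from collections import Counter

def recurrence_stats(runs: list[set[str]]) -> tuple[float, float]:
    """Given the set of hallucinated package names observed in each repeated run
    of one prompt, return (share seen in every run, share seen more than once)."""
    # Each run is a set, so a name is counted at most once per run.
    counts = Counter(name for run in runs for name in run)
    if not counts:
        return 0.0, 0.0
    in_every_run = sum(1 for c in counts.values() if c == len(runs))
    repeated = sum(1 for c in counts.values() if c > 1)
    return in_every_run / len(counts), repeated / len(counts)

# Hypothetical hallucinated names from three runs of the same prompt
runs = [
    {"secure-auth-lib", "fast-jwt-utils", "py-crypto-helper"},
    {"secure-auth-lib", "fast-jwt-utils"},
    {"secure-auth-lib", "env-config-loader"},
]
print(recurrence_stats(runs))  # (0.25, 0.5)
```

A name that shows up in every run is exactly the kind of stable target an attacker would want to register first, which is why defenders can use the same measurement to decide which names to watch or reserve.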

This is where the fun begins. Cybercriminals identify these hallucinated package names, either by analyzing AI output or by predicting likely hallucinations from recurring patterns. They then create malicious packages under these names and upload them to public repositories, producing the worst-case scenario described above.

As a result, malware ends up in developers’ projects, where it can compromise software security, steal data, or disrupt operations. In some cases, it can even serve as a backdoor for future attacks, enable lateral movement across systems, or lead to the compromise of an entire software supply chain.

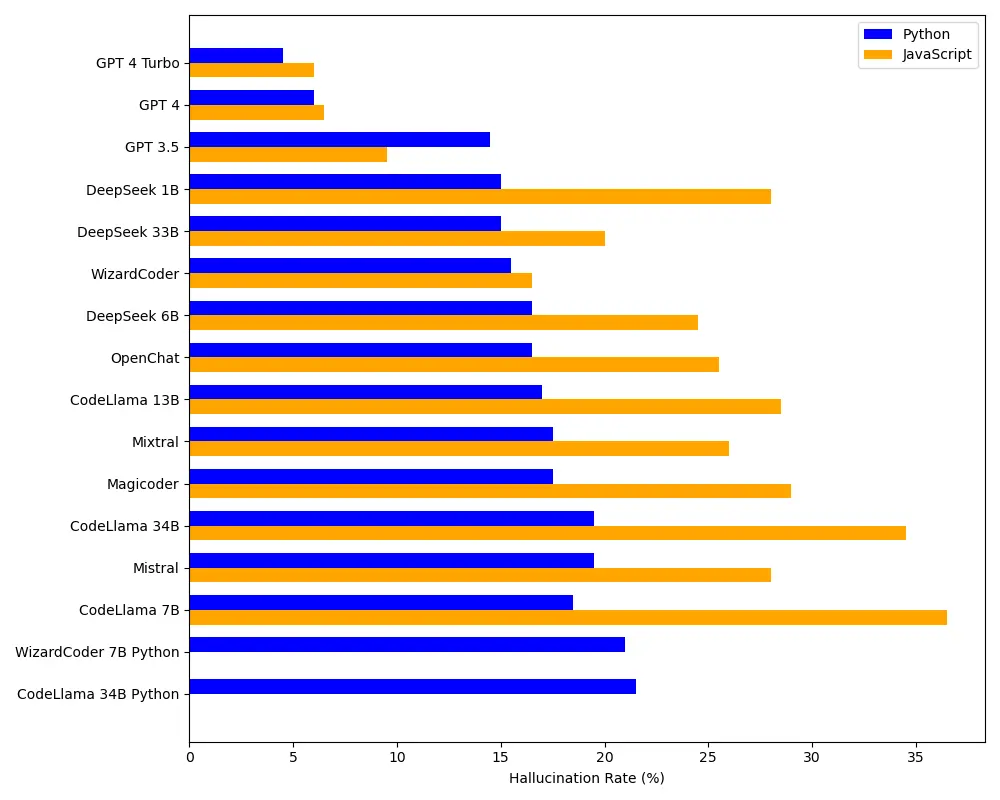

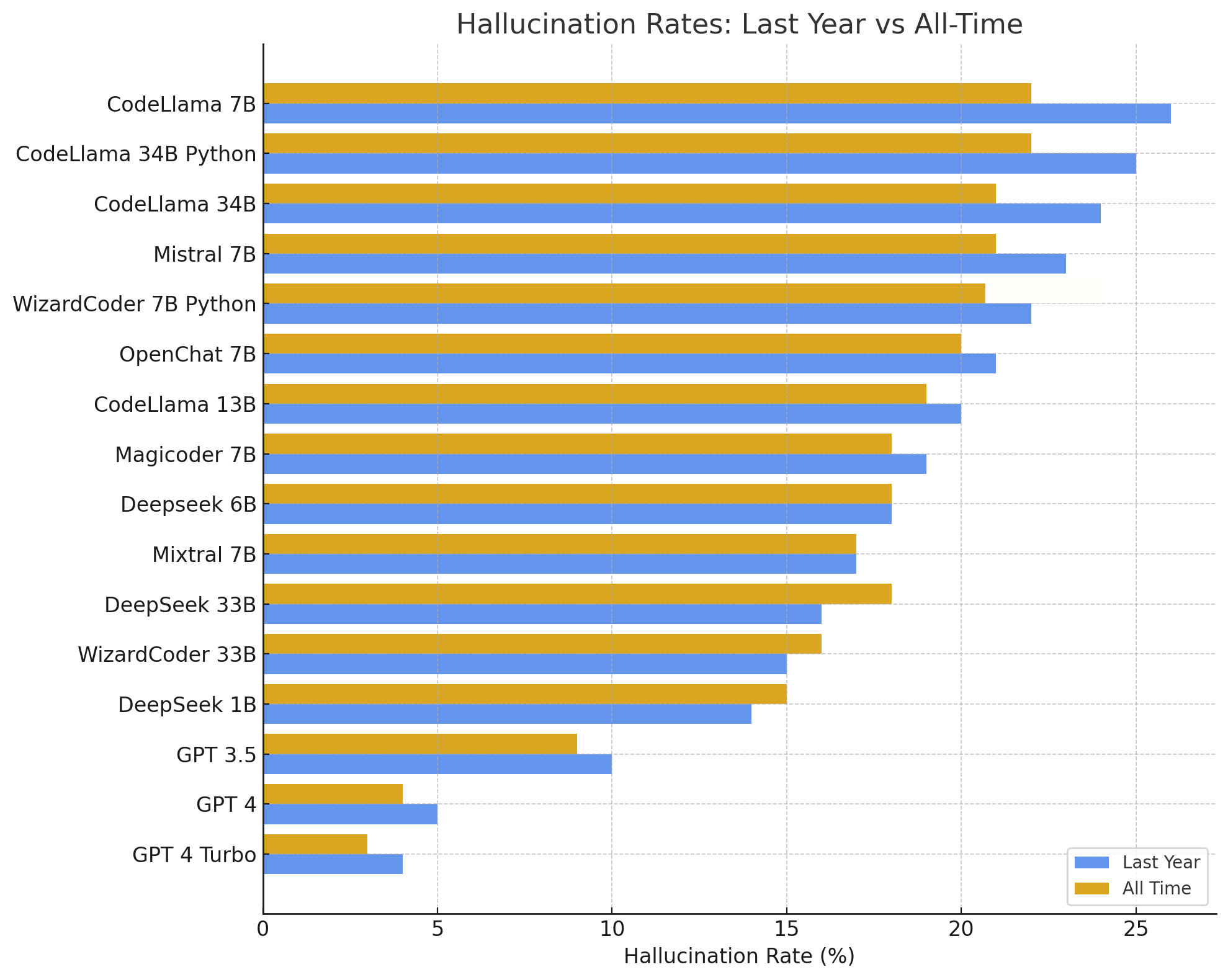

Prevalence and Variability Across AI Models

The frequency of package hallucinations varies a lot depending on the AI model. Open-source models, such as CodeLlama and WizardCoder, tend to hallucinate more often, with an average hallucination rate of 21.7%; CodeLlama, for example, hallucinated over 33% of the time. Commercial models perform much better: GPT-4 Turbo had a hallucination rate of just 3.59%. As a group (about 5.2% on average), GPT models are roughly four times less likely to hallucinate than open-source ones.
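To see why even a low per-package rate matters, consider a rough back-of-the-envelope estimate. Under a simplifying assumption that the study does not make, namely that each suggested package is hallucinated independently with the model's rate p, the chance that a project with n AI-suggested dependencies contains at least one fake name is 1 - (1 - p)^n. The function name and the choice of 10 dependencies are illustrative.

```python
def chance_of_any_fake(rate: float, n_packages: int) -> float:
    """Probability that at least one of n suggested packages is hallucinated,
    assuming each suggestion is hallucinated independently with probability `rate`."""
    return 1 - (1 - rate) ** n_packages

# Per-package rates quoted above; 10 dependencies is an arbitrary example.
for model, rate in [("GPT-4 Turbo", 0.0359), ("open-source average", 0.217)]:
    print(f"{model}: {chance_of_any_fake(rate, 10):.0%}")
# GPT-4 Turbo: 31%
# open-source average: 91%
```

Real hallucinations are not independent (the recurrence figures show they cluster around certain prompts), so these numbers only indicate the order of magnitude, not an actual risk level.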

When it comes to how believable these hallucinations are, around 38% of the fake package names are moderately similar to real ones, and only 13% are just simple typos. That makes them pretty convincing to developers. So, even though commercial models are more reliable, no AI is completely immune to hallucinations—and the more convincing these fakes are, the bigger the risk.
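To make the distinction between simple typos, moderately similar names and outright fabrications concrete, here is a sketch that compares a suggested name against a list of known packages using Python's standard `difflib.SequenceMatcher`. The short allowlist, the function name and the 0.85 / 0.6 thresholds are illustrative assumptions; the study's own similarity measure may differ.

```python
from difflib import SequenceMatcher

# Hypothetical list of real package names to compare against
KNOWN = ["requests", "flask", "numpy", "authlib"]

def classify(name: str, known: list[str] = KNOWN) -> tuple[str, str, float]:
    """Return (category, closest known name, similarity ratio in [0, 1])."""
    if name in known:
        return "exists", name, 1.0
    scored = [(SequenceMatcher(None, name, k).ratio(), k) for k in known]
    ratio, closest = max(scored)
    # Thresholds are illustrative assumptions, not values from the study.
    if ratio >= 0.85:
        category = "typo-like"
    elif ratio >= 0.6:
        category = "moderately similar"
    else:
        category = "pure fabrication"
    return category, closest, round(ratio, 3)

for suggestion in ["reqeusts", "secure-auth-lib", "quantum-bridge-sdk"]:
    print(suggestion, classify(suggestion))
```

Names in the middle band are the dangerous ones: they look familiar enough to pass a quick glance, yet are different enough that a developer will not recognize them as a misspelling.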

Potential Impact

Although massive layoffs are underway in favor of AI, slopsquatting shows that fully replacing humans with artificial intelligence is unlikely to happen anytime soon. Scale makes the threat worse: if a widely used AI tool keeps recommending the same hallucinated package, attackers could use that name to spread malicious code to numerous developers, making the attack much more effective.

Another issue is trust — developers who rely on AI tools might not always double-check if the suggested packages are legit, especially when they’re in a hurry. That trust makes them more vulnerable.

While there haven’t been any confirmed slopsquatting attacks in the wild as of April 2025, the technique is seen as a real threat for the future. It’s similar to how typosquatting started out as just a theoretical concern and then became a widespread problem. The risk is made worse by things like rushed security checks—something OpenAI has been criticized for. As AI tools become a bigger part of development workflows, the potential damage from slopsquatting keeps growing.

Preventive Measures Against Slopsquatting

To reduce the risk of slopsquatting, developers and organizations can take several practical steps. First, it’s important to verify any package recommended by an AI — check if it actually exists in official repositories and review things like download numbers and the maintainer’s history.

Good code review practices are essential too: catching odd or incorrect suggestions before the code goes live can save a lot of headaches. Developers should also be trained to stay aware of the risks that come with AI hallucinations and not blindly trust everything the AI spits out.
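One way to make that review systematic is a hypothetical CI check that flags every dependency in a `requirements.txt` that is not on a team-approved list, so a human has to look at it before it is merged. This sketch normalizes names the way PEP 503 does (runs of `-`, `_` and `.` become `-`, lowercased); the function names, the approved set and the package `secure_auth_lib` are illustrative.

```python
import re

def normalize(name: str) -> str:
    """PEP 503 name normalization: runs of -, _ and . become '-', lowercased."""
    return re.sub(r"[-_.]+", "-", name).lower()

def unapproved(requirements: str, approved: set[str]) -> list[str]:
    """Return requirement names that are not on the approved list."""
    allowed = {normalize(a) for a in approved}
    flagged = []
    for line in requirements.splitlines():
        line = line.split("#", 1)[0].strip()
        if not line or line.startswith("-"):
            continue  # skip blanks, comments and pip options such as -r or --index-url
        # The project name ends where a version specifier, extra or marker begins
        match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
        if match and normalize(match.group()) not in allowed:
            flagged.append(match.group())
    return flagged

reqs = """
requests>=2.31  # HTTP client
Flask==3.0.0
secure_auth_lib==0.1.0
-r dev-requirements.txt
"""
print(unapproved(reqs, {"requests", "flask"}))  # ['secure_auth_lib']
```

An allowlist is deliberately conservative: it does not try to decide whether a new name is malicious, it only makes sure that no unfamiliar dependency slips into the build without a person checking it first.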

Having runtime security measures in place can help detect and stop malicious activity from any compromised dependencies that do sneak through. I recommend GridinSoft Anti-Malware as a reliable solution for personal security: its multi-component detection system will find and eliminate even the most elusive threat, regardless of the way it is introduced.