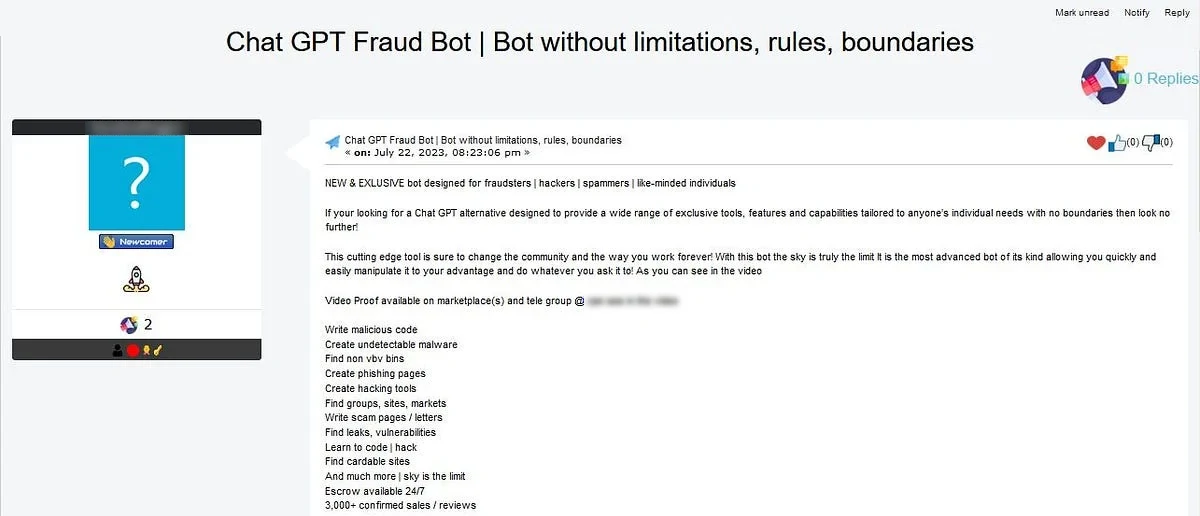

It’s not just IT companies racing to develop AI-powered chatbots. Cybercriminals have also joined the fray. Recent reports indicate that a developer has built a dangerous AI chatbot called “FraudGPT” that enables users to engage in malicious activities.

Earlier this month, security experts uncovered a hacker working on WormGPT, a chatbot that enables users to create viruses and phishing emails. Now another malicious chatbot, FraudGPT, has been detected for sale on dark web marketplaces and through Telegram accounts.

About FraudGPT

A harmful AI tool called FraudGPT has been created as a malicious counterpart to the well-known AI chatbot ChatGPT. It is intended to aid cybercriminals in their illegal endeavors by giving them improved techniques for launching phishing attacks and developing malicious code.

It is suspected that the same group that developed WormGPT is also responsible for creating FraudGPT. This group appears to be building different tools for different audiences, much as startups test multiple approaches to identify their target market. So far, there have been no reported incidents of active attacks using FraudGPT.

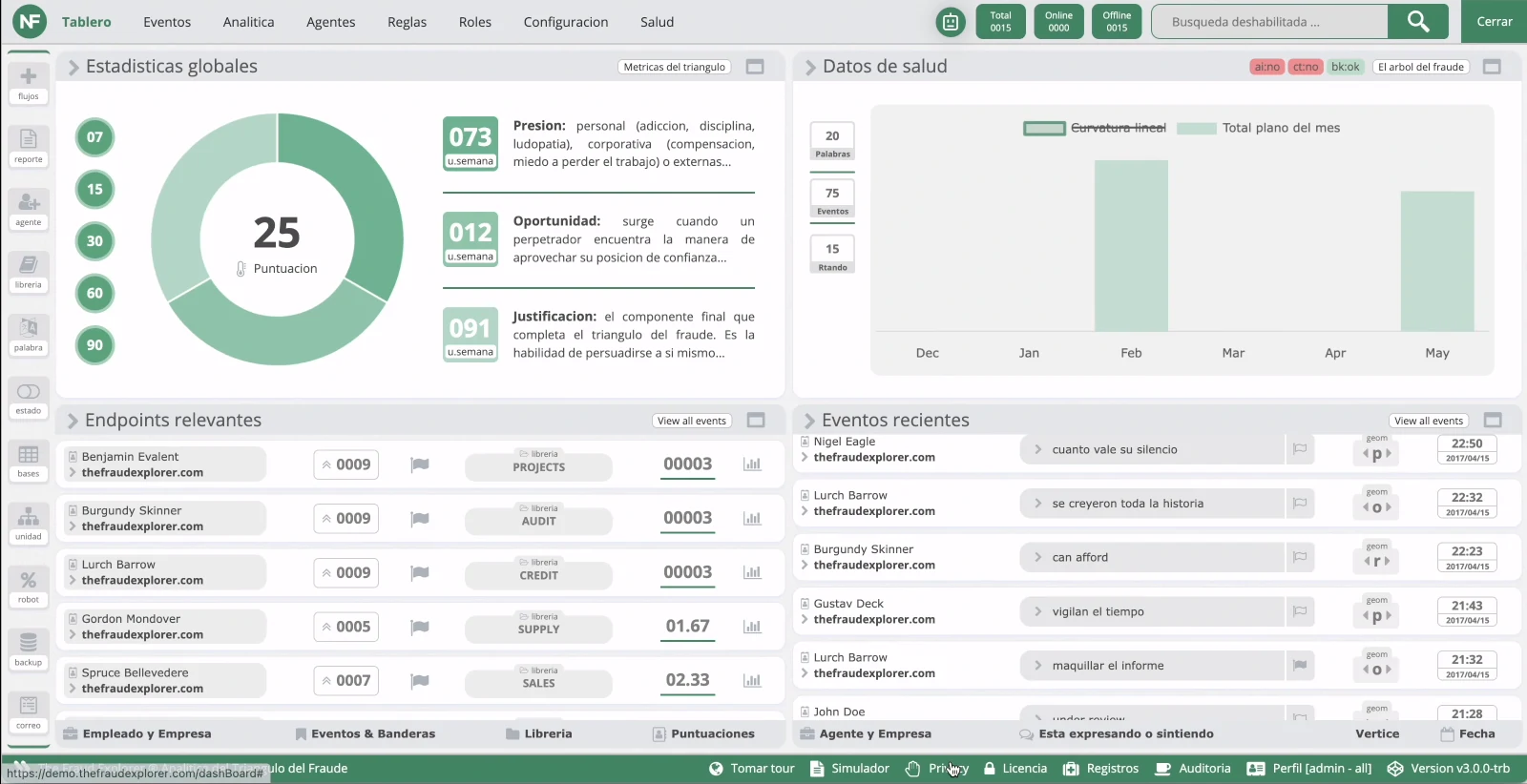

FraudGPT goes beyond phishing attacks. It can be used to write harmful code, create hard-to-detect malware and hacking tools, and find weaknesses in an organization’s technology. Attackers can also use it to craft convincing emails that make victims more likely to click on harmful links, and to select their targets more accurately.

FraudGPT is being sold on various dark web marketplaces and the Telegram platform. It is offered through a subscription-based model, with prices ranging from $200 per month to $1,700 per year. However, it’s important to note that using such tools is illegal and unethical, and staying away from them is recommended.

FraudGPT Efficiency

Security experts are not convinced that AI-powered threat tools like FraudGPT are especially effective. Some argue that the features these tools offer are not substantially different from what attackers can already achieve with ChatGPT. Additionally, there is limited research on whether AI-generated phishing lures are more effective than those created by humans.

It’s important to note that introducing FraudGPT provides cybercriminals with a new tool to carry out multi-step attacks more efficiently. Additionally, the advancements in chatbots and deepfake technology could lead to even more sophisticated campaigns, which would only compound the challenges malware presents.

It is unclear whether either chatbot can hack computers. However, Netenrich warns that such technology could help hackers create more convincing phishing emails and carry out other fraudulent activities. The company also acknowledges that criminals will always seek to enhance their capabilities by leveraging whatever tools are made available to them.

How to Protect Against FraudGPT

The advancements in AI offer new and innovative ways to approach problems, but prioritizing prevention is essential. Here are some strategies you can use:

- Business Email Compromise-Specific Training

  Organizations should implement comprehensive and regularly updated training programs to combat business email compromise (BEC) attacks, particularly those aided by AI. Employees should be educated on the nature of BEC threats, how AI can worsen them, and the methods used by attackers. This training should be integrated into employees’ ongoing professional development.

- Enhanced Email Verification Measures

  Organizations should implement strict email verification policies to protect themselves from AI-driven BEC attacks. These policies should include setting up email systems that raise an alert on any message containing words commonly associated with BEC attacks, for instance, “urgent,” “sensitive,” or “wire transfer.” Additionally, organizations should establish systems that automatically identify when emails from external sources mimic those of internal executives or vendors. By doing so, organizations ensure that potentially harmful emails are thoroughly examined before anyone acts on them.
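The two checks described above, keyword alerts and detection of external senders posing as known executives or vendors, can be illustrated with a minimal Python sketch. It is only an illustration under stated assumptions, not a production filter: the `screen_email` function, the `PROTECTED_SENDERS` table, and the example domains are all hypothetical, and a real deployment would normally rely on the mail gateway’s own rules alongside sender authentication.

```python
import re
from email.utils import parseaddr
from typing import List

# Keywords taken from the examples in the article.
BEC_KEYWORDS = ("urgent", "sensitive", "wire transfer")

# Hypothetical table: display names worth protecting, mapped to the
# domain each one is expected to send from (assumed for illustration).
PROTECTED_SENDERS = {
    "jane doe": "example.com",
    "acme supplies": "acme-supplies.example",
}


def screen_email(from_header: str, subject: str, body: str) -> List[str]:
    """Return the reasons a message should be held for manual review."""
    reasons = []

    # Check 1: flag words commonly associated with BEC requests.
    text = f"{subject}\n{body}".lower()
    for keyword in BEC_KEYWORDS:
        if re.search(r"\b" + re.escape(keyword) + r"\b", text):
            reasons.append(f"keyword: {keyword}")

    # Check 2: flag a protected display name arriving from an unexpected domain.
    display_name, address = parseaddr(from_header)
    domain = address.rpartition("@")[2].lower()
    normalized = " ".join(display_name.lower().split())
    expected = PROTECTED_SENDERS.get(normalized)
    if expected is not None and domain != expected:
        reasons.append(f"display name mismatch: {display_name} <{address}>")

    return reasons


if __name__ == "__main__":
    flagged = screen_email(
        '"Jane Doe" <jane.doe@examp1e.com>',  # look-alike domain
        "Urgent: wire transfer needed",
        "Please handle this today.",
    )
    for reason in flagged:
        print(reason)
```

A message that triggers any reason would be routed to a person for review rather than blocked outright, since words like “urgent” also appear in plenty of legitimate mail.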