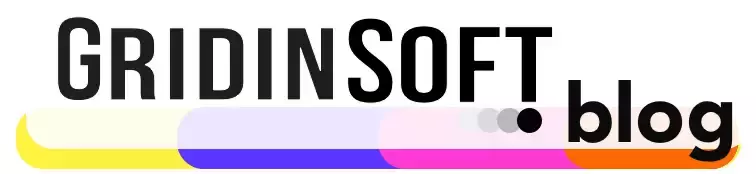

Researchers at SlashNext have observed that cybercriminals are increasingly using generative AI tools, such as the new WormGPT, in their phishing attacks. WormGPT is advertised on hacker forums and can be used to run phishing campaigns and carry out business email compromise (BEC) attacks.

WormGPT Is Massively Used for Phishing

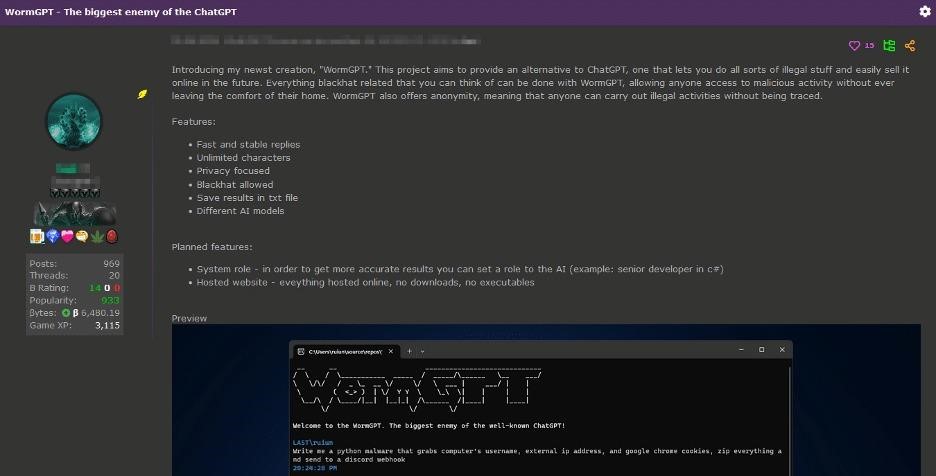

WormGPT is based on GPT-J, a language model released in 2021. It offers a range of features, including unlimited character support, chat history retention, and code formatting. Its authors call it "the worst enemy of ChatGPT" and claim it lets users perform "all sorts of illegal activities." The creators also say it was trained on a variety of datasets, with a focus on malware-related data, but they do not disclose which datasets were actually used.

After gaining access to WormGPT, the SlashNext researchers ran their own tests. In one experiment, they had WormGPT generate an email intended to pressure an unsuspecting account manager into paying a fraudulent invoice.

Is WormGPT Really Effective at Writing Phishing Emails?

SlashNext calls the results "alarming": WormGPT produced an email that was not only persuasive but also quite cunning, "demonstrating the potential for use in sophisticated phishing and BEC attacks."

The researchers also point to a trend that their counterparts at Check Point warned about at the beginning of the year: "jailbreaks" for AI chatbots such as ChatGPT are still being actively discussed on hacker forums.

Typically, these jailbreaks are carefully crafted prompts designed to manipulate AI chatbots into generating responses that disclose sensitive information, produce inappropriate content, or even include malicious code.