According to vulnerability and risk management company Vulcan Cyber, attackers can manipulate ChatGPT to distribute malicious packages to software developers.

We previously reported that ChatGPT has become a new tool for cybercriminals in social engineering, and that ChatGPT is causing a new wave of fleeceware.

Information security specialists have also noticed that amateur hackers use ChatGPT to create malware.

The problem stems from AI hallucinations, in which ChatGPT generates factually incorrect or nonsensical information that looks plausible. Vulcan researchers noticed that ChatGPT, possibly because of outdated training data, recommended code libraries that do not currently exist.

The researchers warned that cybercriminals could collect the names of such non-existent packages and create malicious versions that developers could download based on ChatGPT recommendations.

In particular, Vulcan researchers collected popular questions from the Stack Overflow platform and put those questions to ChatGPT in the context of Python and Node.js.

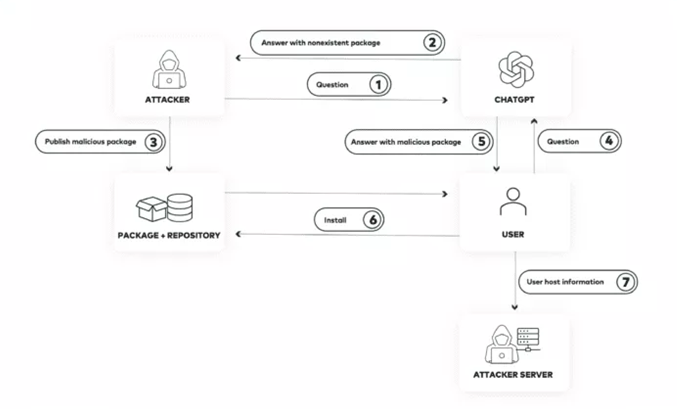

Attack scheme

Experts asked ChatGPT over 400 questions, and about 100 of its answers contained links to at least one Python or Node.js package that doesn’t actually exist. In total, more than 150 non-existent packages were mentioned in ChatGPT responses.

Knowing the names of packages recommended by ChatGPT, an attacker can create malicious copies of them. Because the AI is likely to recommend the same packages to other developers asking similar questions, unsuspecting developers can find and install a malicious version of a package uploaded by an attacker to popular repositories.
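On the defensive side, one way to reduce this risk is to review the install commands in an AI-generated answer before running them. The sketch below is only an illustration under stated assumptions: the helper names `extract_packages` and `unvetted_packages`, the regular expression, and the idea of a team-maintained vetted list are all hypothetical and are not part of Vulcan Cyber's research.

```python
import re

# Assumption: install commands sit on their own line or inside a code span,
# so everything up to the next newline or backtick is treated as arguments.
INSTALL_RE = re.compile(r"\b(pip3?|npm)[ \t]+install[ \t]+([^\n`]+)")


def extract_packages(answer):
    """Return the set of (ecosystem, package) pairs named in install commands."""
    found = set()
    for tool, args in INSTALL_RE.findall(answer):
        ecosystem = "npm" if tool == "npm" else "pypi"
        for token in args.split():
            if token.startswith("-"):  # skip flags such as --save or -U
                continue
            if ecosystem == "npm":
                # Strip a trailing "@version" but keep a leading "@scope/".
                name = token[0] + token[1:].split("@", 1)[0]
            else:
                # Strip version specifiers and extras, e.g. "pkg==1.0" or "pkg[extra]".
                name = re.split(r"[=<>~!\[]", token, maxsplit=1)[0]
            if name:
                found.add((ecosystem, name.lower()))
    return found


def unvetted_packages(answer, vetted):
    """Packages mentioned in the answer that are not on the vetted list."""
    return extract_packages(answer) - set(vetted)


# Hypothetical example: "fakelib-xyz" stands in for a hallucinated name.
answer = "Install the dependencies:\npip install requests fakelib-xyz==1.0\n"
vetted = {("pypi", "requests")}
print(unvetted_packages(answer, vetted))  # {('pypi', 'fakelib-xyz')}
```

Anything this returns deserves a manual look before installation. The parser is deliberately simple: it does not understand flags that take a value (such as `-r requirements.txt`) or commands embedded in running prose.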

Vulcan Cyber demonstrated how this method would work in a real-world setting by creating a package that can steal system information from a device and uploading it to the npm registry.

Given how hackers attack the supply chain by deploying malicious packages to known repositories, it’s important for developers to check the libraries they use to make sure they’re safe. Such checks are even more important with the emergence of ChatGPT, which can recommend packages that don’t really exist or didn’t exist before the attackers created them.
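As a rough illustration of such a check, the sketch below looks up a Python package through PyPI's public JSON endpoint (`https://pypi.org/pypi/<name>/json`) and flags names that are unregistered, very new, or have few releases. The function names and the thresholds (90 days, 3 releases) are arbitrary assumptions chosen for the example, and passing these checks does not prove a package is safe.

```python
import json
import urllib.error
import urllib.request
from datetime import datetime, timezone


def fetch_pypi_metadata(name):
    """Return PyPI's JSON metadata for a package, or None if the name is not registered."""
    url = f"https://pypi.org/pypi/{name}/json"
    try:
        with urllib.request.urlopen(url, timeout=10) as resp:
            return json.load(resp)
    except urllib.error.HTTPError as err:
        if err.code == 404:
            return None
        raise


def first_upload(metadata):
    """Earliest upload time across all release files, or None if there are none."""
    times = [
        datetime.fromisoformat(f["upload_time_iso_8601"].replace("Z", "+00:00"))
        for files in metadata.get("releases", {}).values()
        for f in files
    ]
    return min(times) if times else None


def review_flags(metadata, now=None, min_age_days=90, min_releases=3):
    """Return human-readable warnings; an empty list means no heuristic fired."""
    if metadata is None:
        return ["not on PyPI: the name is unclaimed and could be registered by anyone"]
    now = now or datetime.now(timezone.utc)
    flags = []
    first = first_upload(metadata)
    if first is None:
        flags.append("no uploaded files")
    elif (now - first).days < min_age_days:
        flags.append(f"first upload was only {(now - first).days} days ago")
    if len(metadata.get("releases", {})) < min_releases:
        flags.append("very few releases")
    return flags
```

A typical call would be `review_flags(fetch_pypi_metadata("some-package"))`, where `some-package` is a placeholder for whatever name the AI suggested. Keeping the network fetch separate from the heuristics makes the rules easy to test offline and to adapt to other registries such as npm.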
