Artificial intelligence has become an advanced tool in today’s digital world. It can facilitate many tasks, help solve complex multi-level equations, and even write a novel. But as in any other sphere, cybercriminals have found a way to profit from it. With ChatGPT, they can deceive a user skillfully and convincingly and thus steal their data. The technology’s main criminal application is social engineering.

What is Social Engineering?

Social engineering is a method fraudsters use to manipulate people’s psychology and behavior in order to deceive individuals or organizations for malicious purposes. The typical objective is to obtain sensitive information, commit fraud, or gain unauthorized access to computer systems or networks. To look more legitimate, attackers try to contextualize their messages or, where possible, impersonate well-known people.

Social engineering attacks are frequently successful because they take advantage of human psychology, using trust, curiosity, urgency, and authority to deceive individuals into compromising their security. That’s why it’s crucial to remain watchful and take security precautions, such as being careful of unsolicited communications, verifying requests before sharing information, and implementing robust security practices to safeguard against social engineering attacks.

ChatGPT and Social Engineering

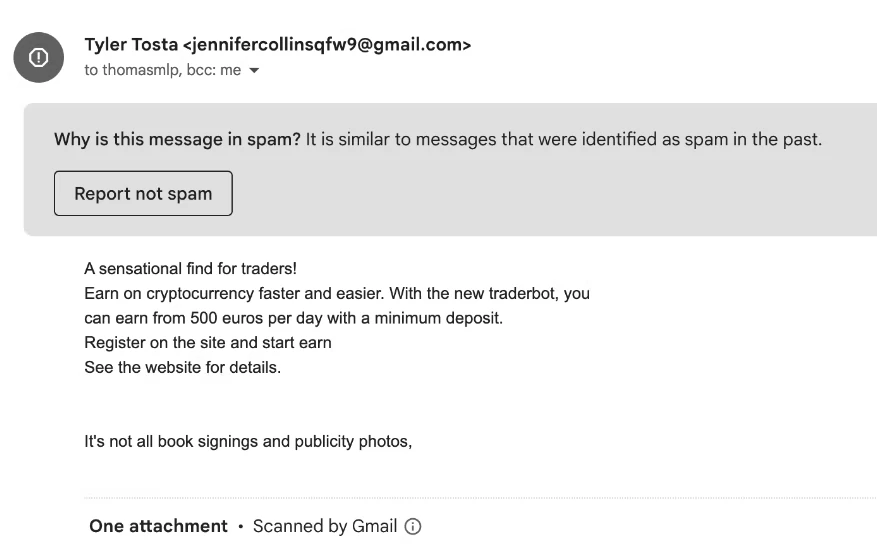

Social engineering is a tactic hackers use to manipulate individuals into performing specific actions or divulging sensitive information, putting their security at risk. While ChatGPT could be misused as a tool for social engineering, it’s not explicitly designed for that purpose. Cybercriminals could exploit any conversational AI or chatbot for their social engineering attacks. Attackers’ messages used to be recognizable by their poor grammar and spelling; with ChatGPT, they now read as convincing, competent, and accurate.

Example of an Answer from ChatGPT

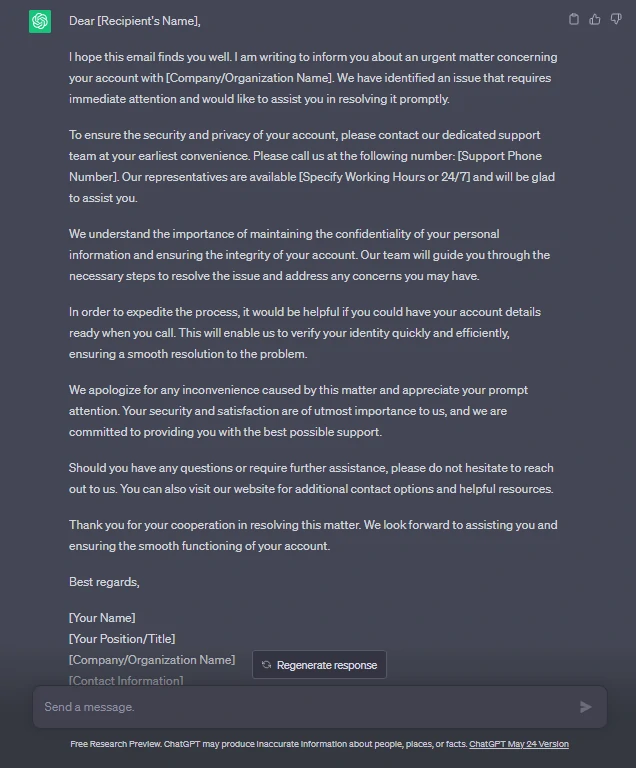

To prevent abuse, OpenAI, the creator of ChatGPT, has implemented safeguards in the tool. However, these measures can be bypassed, mainly through social engineering of the chatbot itself: a malicious request is phrased as an innocuous one. For example, an attacker could ask ChatGPT to write an ordinary-looking email and then send it with a deceitful link or request included.

A request to ChatGPT might look roughly like this: “Write a friendly but professional email saying there’s a question with their account and to please call this number.”

Here is the first answer from ChatGPT:

Why Is ChatGPT Dangerous?

There are concerns that cyber attackers could use ChatGPT to bypass detection tools. This AI-powered tool can generate multiple variations of messages and code, making it difficult for spam filters and malware detection systems to identify repeated patterns. It can also explain code in a way that is helpful to attackers looking for vulnerabilities.
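To see why variation matters, consider a deliberately naive filter that blocks messages by exact fingerprint. The sketch below is an illustration only and does not describe how any particular spam filter works; the `fingerprint` and `is_blocked` helpers and the sample messages are hypothetical.

```python
import hashlib


def fingerprint(message: str) -> str:
    """Hash a message after lowercasing and collapsing whitespace."""
    normalized = " ".join(message.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


# Hypothetical blocklist built from one previously reported phishing message.
known_bad = {fingerprint("There is a question with your account. Please call us.")}


def is_blocked(message: str) -> bool:
    """Return True if the message matches a known-bad fingerprint."""
    return fingerprint(message) in known_bad


# A resend that differs only in case and spacing still matches.
resend = "There is a question  with your account. PLEASE call us."

# A reworded variant with the same intent produces a different fingerprint.
variant = "We noticed an issue with your account. Kindly give us a call."

print(is_blocked(resend))   # True
print(is_blocked(variant))  # False
```

Real filters rely on far more than exact matching, but the same weakness applies to any check that depends on repeated wording: each freshly generated variant has to be recognized on its own.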

In addition, other AI tools can imitate specific people’s voices, allowing attackers to deliver credible and professional social engineering attacks. For example, this could involve sending an email followed by a phone call that spoofs the sender’s voice.

ChatGPT can also create convincing cover letters and resumes that can be sent to hiring managers as part of a scam. Unfortunately, there are also fake ChatGPT tools that exploit the popularity of this technology to steal money and personal data. Therefore, it’s essential to be cautious and only use reputable chatbot sites based on trusted language models.

Protect Yourself Against AI-Enhanced Social Engineering Attacks

It’s important to remain cautious when interacting with unknown individuals or sharing personal information online. Whether you’re dealing with a human or an AI, if you encounter any suspicious or manipulative behavior, it’s crucial to report it and take appropriate steps to protect your personal data and online security.

- Be cautious of unsolicited messages or requests, even if they seem to come from someone you know.

- Always verify the sender’s identity before clicking links or giving out sensitive information.

- Use unique and strong passwords, and enable two-factor authentication on all accounts.

- Keep your software and operating systems up to date with the latest security patches.

- Be aware of the risks of sharing personal information online and limit the amount of information you share.

- Use cybersecurity tools that incorporate AI technology, such as natural language processing and machine learning, to detect potential threats and alert humans for further investigation.

- Consider using tools like ChatGPT in phishing simulations to familiarize users with the polished quality and tone of AI-generated communications.
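The advice to verify a message before clicking its links can be partly automated. Here is a minimal sketch, assuming you already know which domain the claimed sender legitimately uses; `link_matches_domain` and the example URLs are hypothetical, and a passing check does not prove a message is safe.

```python
from urllib.parse import urlparse


def link_matches_domain(url: str, trusted_domain: str) -> bool:
    """Return True if the URL's host is the trusted domain or a subdomain of it."""
    # hostname is None when the URL has no scheme/netloc, so fall back to "".
    host = (urlparse(url).hostname or "").lower()
    trusted = trusted_domain.lower()
    return host == trusted or host.endswith("." + trusted)


# A genuine subdomain of the trusted domain passes.
print(link_matches_domain("https://login.example.com/reset", "example.com"))

# A lookalike that merely starts with the trusted name is rejected.
print(link_matches_domain("https://example.com.evil.test/login", "example.com"))
```

Comparing the parsed hostname, rather than searching the raw URL for the trusted name, is the design choice that matters here: lookalike links often embed a familiar name somewhere other than the actual domain.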

With the rise of AI-enhanced social engineering attacks, staying vigilant and following online security best practices is crucial.