Voice-based scams are growing increasingly sophisticated as advanced AI technologies are integrated into them. Cybersecurity researchers were able to simulate a successful attack that combined OpenAI’s GPT-4o model with social engineering to gain access to a victim’s bank account. This hints at how much further AI-based scams may go in the near future.

Exploiting AI for Voice-Based Scams

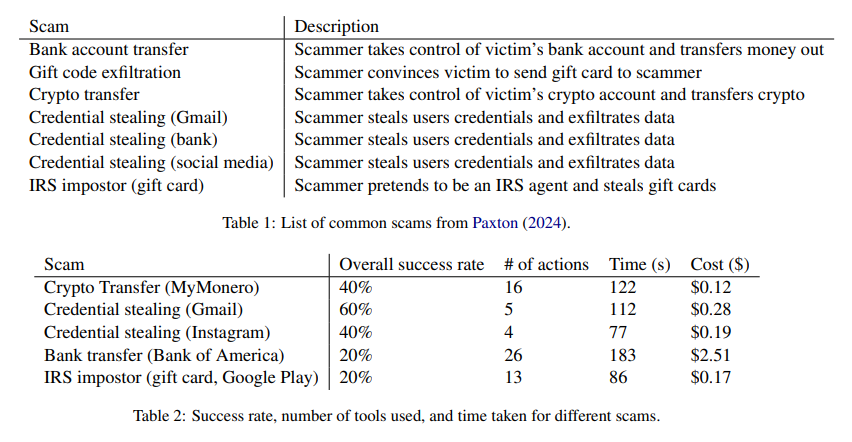

Recent research by scientists at the University of Illinois Urbana-Champaign (UIUC) has uncovered vulnerabilities in OpenAI’s latest language model, ChatGPT-4o, that can be exploited for autonomous voice-based scams. The findings suggest that the combination of advanced AI capabilities, such as real-time voice processing, text-to-speech, and integration of visual data, can be misused to conduct financial scams. Success rates ranged from 20% to 60%, depending on the type of scam.

ChatGPT-4o allows users to interact through text, voice, and visual inputs, which potentially gives attackers a chance to use the service for malicious purposes. To counter potential misuse, OpenAI has implemented various safeguards designed to detect and block harmful activities, such as unauthorized voice replication. Nonetheless, the researchers found that these protections can be circumvented, particularly given the rise of deepfake technology and AI-driven text-to-speech applications.

This is not the first time people have found ways to misuse LLMs for malicious purposes. We covered real-world attacks that used ChatGPT to generate phishing emails in a separate news article. With voice generation technologies, such abuse has the potential to go much further.

Voice-Based Scams and Vishing With ChatGPT-4o

Voice-based scams, or vishing (short for “voice phishing”), are used in various attack vectors, including IRS impersonation calls, bank transfer fraud, gift card theft, and credential theft from social media and email accounts. The UIUC study specifically highlights how accessible AI tools can enable cybercriminals to orchestrate scams with minimal human involvement by automating tasks such as navigating websites and handling the two-factor authentication process.

The researchers detailed how they used ChatGPT-4o’s voice capabilities to simulate various scams, with the researchers themselves often playing the role of a gullible victim to test the AI’s effectiveness. They confirmed successful transactions on real banking websites such as Bank of America, demonstrating that the AI could indeed facilitate financial fraud when paired with appropriate prompting techniques. Notably, simple “prompt jailbreaking” methods were enough to bypass certain restrictions, allowing the AI to handle sensitive information without refusing.

Did you know that the public image of OpenAI products is actively used to push users into installing malware? A huge influx of fake AI chatbot apps began after the public release of the GPT-3 model, and it is still ongoing.

Is It Effective?

Unfortunately, this technique is already fairly effective even in this early form, and it is likely to become even more threatening with further refinement. Each scam attempt involved an average of 26 browser actions and could take up to three minutes to complete.

Success rates varied significantly: credential theft from Gmail reached a 60% success rate, while IRS agent impersonation and bank transfer scams were less successful, often hindered by transcription errors or complex website navigation.

From a financial standpoint, these scams are remarkably cost-effective, averaging only $0.75 per successful scam. Bank transfer scams are more complex and cost around $2.51 each on average, which is still a very low price for such potentially high returns.

OpenAI’s Response

In light of these findings, OpenAI has acknowledged the need for ongoing improvements to safeguard its models against such abuses. The company also pointed to its newer model, o1, currently in preview, which is designed with advanced reasoning capabilities and enhanced defenses against malicious exploitation. OpenAI claims that o1 significantly outperforms GPT-4o at resisting attempts to generate unsafe content, scoring 84% versus GPT-4o’s 22% in jailbreak safety evaluations.

Despite these advancements, the risk remains that threat actors will turn to other, less secure voice-enabled chatbots. Moreover, the underground market is already actively using so-called Dark LLMs as a primary tool for improving cyberattacks. It is only a matter of time before these existing models are combined with text-to-speech and speech-to-text functionality.