Wiz Research discovered a publicly accessible DeepSeek database containing sensitive information, including user chat history, API keys, and logs. It also exposed backend data with internal details about infrastructure performance. The data sat unprotected and open to anyone on the internet, which makes this far more serious than an ordinary high-profile leak.

DeepSeek AI Data Breach: Over a Million Log Entries and Sensitive Keys Exposed

DeepSeek, a fast-rising Chinese AI startup that became known worldwide within days for its open-source models, has landed in hot water over a major security lapse. Researchers at Wiz discovered that DeepSeek had left one of its ClickHouse databases publicly accessible on the internet, potentially allowing unauthorized access to sensitive internal data. Isn't it ironic that an AI company that claims to be smarter than humans couldn't even secure its own database?

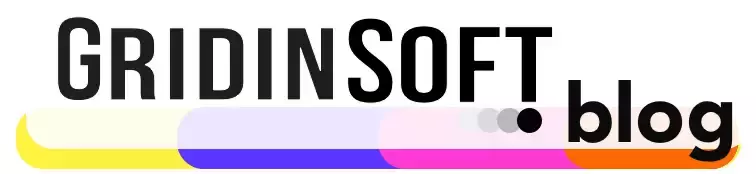

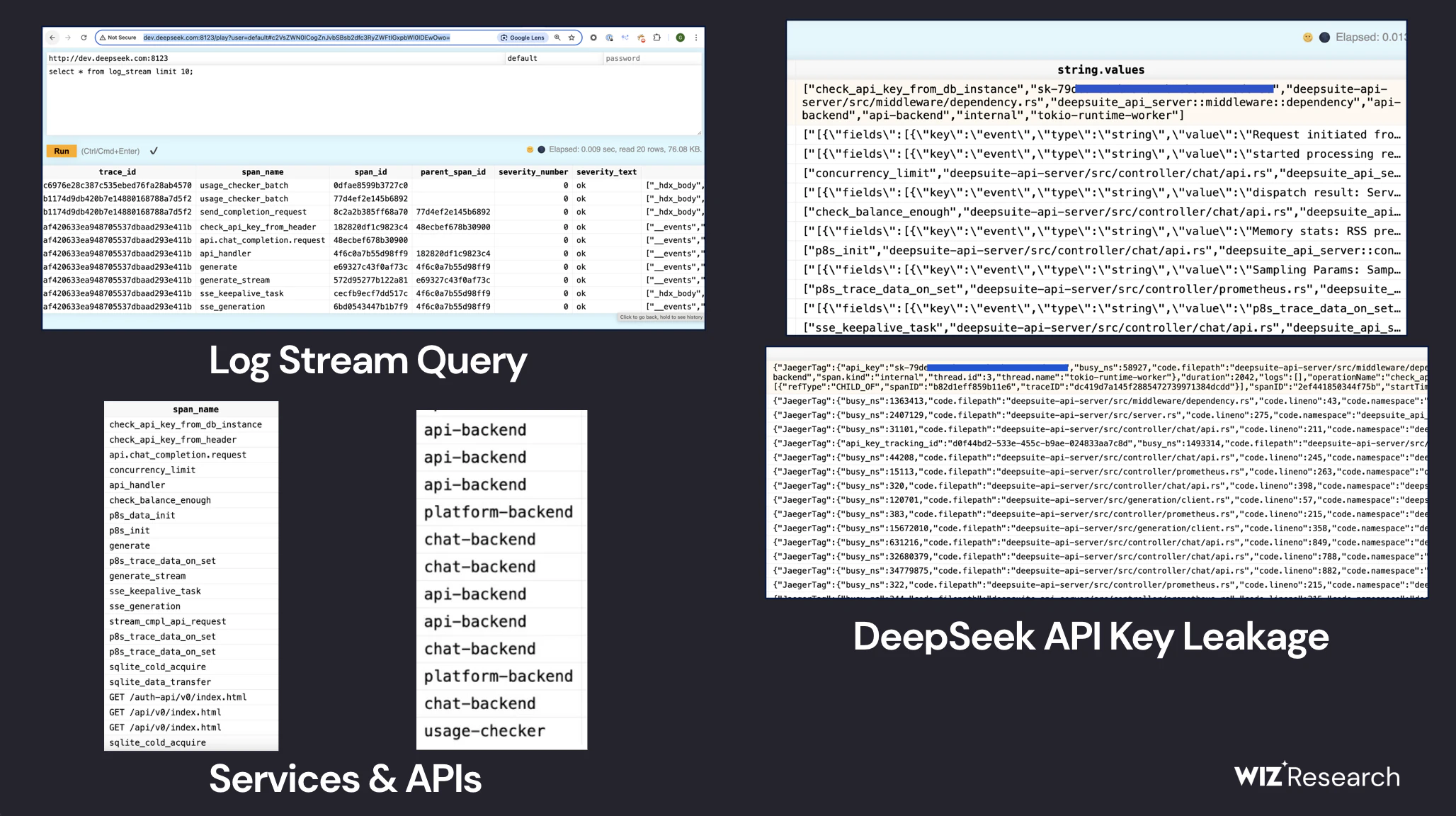

The exposed database contained over a million log entries, including chat history, backend details, API keys, and operational metadata: essentially the backbone of DeepSeek's infrastructure. API secrets in particular are highly sensitive because they act as authentication tokens for accessing services. If these keys were compromised, attackers could use them to manipulate AI models, extract user data, or even take control of internal systems.

How Was the Data Accessed?

The exposed database ran on ClickHouse, an open-source columnar database optimized for large-scale data analytics. It was hosted at oauth2callback.deepseek[.]com:9000 and dev.deepseek[.]com:9000 and required no authentication. Anyone who discovered these endpoints could connect and potentially extract or alter the data at will.

ClickHouse supports an HTTP interface that lets users run SQL queries directly from a web browser or the command line, without dedicated database management software. As a result, any attacker who knew the right queries could extract data, delete records, or potentially escalate their privileges within DeepSeek's infrastructure.
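To make the HTTP interface concrete, here is a minimal Python sketch, assuming a ClickHouse test instance you control at `localhost:8123` (8123 is ClickHouse's default HTTP port). The host, port, and helper names are illustrative and are not DeepSeek's setup. The SQL travels as an ordinary `query` URL parameter, so any HTTP client will do; on a server that allows anonymous access, nothing else is needed. When credentials are required, they can be supplied through ClickHouse's `X-ClickHouse-User` and `X-ClickHouse-Key` headers.

```python
import urllib.parse
import urllib.request

# Hypothetical local test instance; 8123 is ClickHouse's default HTTP port.
CLICKHOUSE_HOST = "localhost"
CLICKHOUSE_HTTP_PORT = 8123


def build_query_request(sql, host=CLICKHOUSE_HOST, port=CLICKHOUSE_HTTP_PORT,
                        user=None, password=None):
    """Build (but do not send) a GET request for ClickHouse's HTTP interface."""
    # The SQL statement is passed as the `query` URL parameter.
    params = urllib.parse.urlencode({"query": sql})
    url = f"http://{host}:{port}/?{params}"
    headers = {}
    if user is not None:
        # ClickHouse reads credentials from these headers when they are present.
        headers["X-ClickHouse-User"] = user
        headers["X-ClickHouse-Key"] = password or ""
    return urllib.request.Request(url, headers=headers, method="GET")


def run_query(request, timeout=5.0):
    """Send the request and return the raw text response."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")


if __name__ == "__main__":
    # No credentials: this succeeds only if the server allows anonymous access,
    # which is the kind of misconfiguration described above.
    req = build_query_request("SHOW TABLES")
    print(req.full_url)
    try:
        print(run_query(req))
    except OSError as exc:  # URLError and HTTPError are subclasses of OSError
        print(f"query failed: {exc}")
```

On a properly configured server, the anonymous request is rejected and only the credentialed variant succeeds. According to Wiz, the exposed DeepSeek instance required no credentials at all.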

Wiz researcher Gal Nagli pointed out that while much of the AI security discourse focuses on future risks (such as AI model manipulation and adversarial attacks), real-world threats often stem from elementary mistakes like exposed databases.

As Nagli notes, AI firms must prioritize data protection by working closely with security teams to prevent such leaks. If attackers had gained access to DeepSeek's logs, they could have harvested API keys to abuse its AI services, analyzed chat logs to extract user data and private interactions, and manipulated internal settings to alter how the models operate.
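Because the harvestable keys sat in plaintext log entries, one common safeguard is to mask secrets before log lines are ever written. The Python sketch below assumes, purely for illustration, that keys look like `sk-` followed by at least 16 letters or digits and that tokens may also appear as `Bearer <token>` values; both patterns and the `redact_secrets` helper are hypothetical, and a real deployment would match its own credential formats.

```python
import re

# Hypothetical key format for illustration: "sk-" followed by 16+ alphanumerics.
# The leading \b avoids matching inside words such as "task-...".
API_KEY_PATTERN = re.compile(r"\bsk-[A-Za-z0-9]{16,}")

# Hypothetical "Bearer <token>" values; group 1 keeps the "Bearer " prefix.
BEARER_PATTERN = re.compile(r"(Bearer\s+)[A-Za-z0-9._\-]+", re.IGNORECASE)


def redact_secrets(line: str) -> str:
    """Return the log line with anything that looks like a credential masked."""
    line = API_KEY_PATTERN.sub("[REDACTED]", line)
    line = BEARER_PATTERN.sub(r"\1[REDACTED]", line)
    return line


print(redact_secrets("user=42 api_key=sk-abcdefghijklmnop1234 status=200"))
# prints: user=42 api_key=[REDACTED] status=200
```

Redacting at write time means that even a log store exposed the way DeepSeek's was would not hand out usable keys.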

So What Now?

Despite such high-profile failures, the service remains popular, judging by its download figures in the official app stores. Beyond this incident, however, people concerned about data security have broader questions about the service. Its privacy policies are under investigation, particularly in Europe, over its handling of user data. As a Chinese AI company, DeepSeek is also being examined by U.S. authorities for potential national security risks.

Additionally, OpenAI and Microsoft suspect that DeepSeek may have used OpenAI's API without permission to train its models via distillation, a process in which a model is trained on the outputs of a more advanced model rather than on raw data. The Italian data protection authority, Garante, recently demanded information on DeepSeek's data collection practices, and DeepSeek's apps subsequently became unavailable in Italy. Meanwhile, Ireland's Data Protection Commission (DPC) has made a similar request.
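For readers unfamiliar with the term, the classic soft-target form of distillation can be sketched in a few lines of Python: the student model is penalized by the KL divergence between the teacher's and its own temperature-softened output distributions. This is a textbook illustration with made-up logits, not a description of what DeepSeek is alleged to have done. Distillation through an API would more likely mean training on the teacher's generated responses, since an API does not usually return full output distributions.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert raw scores into probabilities, softened by `temperature`."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same outcomes."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """Soft-target loss: how far the student's softened outputs are from the teacher's."""
    teacher_probs = softmax(teacher_logits, temperature)
    student_probs = softmax(student_logits, temperature)
    # Scaling by T^2 keeps gradient magnitudes comparable across temperatures.
    return temperature ** 2 * kl_divergence(teacher_probs, student_probs)


# Made-up logits over three candidate next tokens.
teacher = [4.0, 1.0, 0.5]
close_student = [3.8, 1.1, 0.4]
far_student = [0.5, 1.0, 4.0]
print(distillation_loss(teacher, close_student))  # small
print(distillation_loss(teacher, far_student))    # much larger
```

Minimizing this loss pushes the student toward reproducing the teacher's behavior without access to the teacher's training data, which is why unauthorized distillation is a concern for model providers.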